Describing and understanding the world: from probability and statistics to field theory

Preprint of a chapter published in T. Archibald & D. E. Rowe, eds., A Cultural History of Mathematics, Volume 5: A Cultural History of Mathematics in the Nineteenth Century, London, Bloomsbury, 2024

The final quarter of the eighteenth century saw its share of revolutions. The North American colonies of the British Crown rebelled and, with the timely assistance of French troops and naval forces, gained their independence, giving rise to the United States of America. A few years later, across the Atlantic, the French monarchy was overthrown: the first French Republic was proclaimed in 1792, and King Louis XVI was guillotined the following year. Among the changes brought about by the new French state was a closer alignment of science and technology with state interests, including the establishment of a variety of standards enforced by the power of the state. Among the most celebrated of the new French standards was the metric system of decimalized weights and measures. The meter, gram, and liter were prescribed, with the assistance of members of the Paris Academy of Sciences, for the measurement of length, mass, and liquid volume.

While French leaders had hoped that Britain and the United States would adopt the metric system, their proposal was flatly refused. Nonetheless, the metric system was established by law in France in 1795, setting in motion a standardization of units of measurement in science and commerce that expanded both in purview and in practice over the next two centuries. Scientists at the Paris Academy of Sciences devised the first metric system, and in the nineteenth century German and British scientists would help extend it, its utility for communicating scientific results being widely recognized.

The early years of the French Republic produced important institutional changes in the organization of science and technology, including the foundation of the École polytechnique, staffed with some of France’s most illustrious savants, and the Bureau of Longitudes, likewise staffed, and charged with publishing ephemerides for use in maritime navigation. In 1800, a Bureau of Statistics was created under the French Ministry of the Interior, and charged with quantifying selected items in topography, population, social situation, agriculture, industry and commerce. The Republic was soon overthrown by Napoleon, who had great plans for both the French state and all of Europe, involving not only military conquest but also a thorough reform of the legal system: the Napoleonic Code. In this latter respect, Napoleon saw himself as realizing one of the primary objectives of the French Revolution: to enact a single code of law for the entire nation. In France, this legal reform was realized by a centralized government bureaucracy.

These innovations in standardization of measurement and bureaucratic centralization realized in the first two decades of the post-revolutionary French state were meant to bring about greater prosperity and well-being to the populace at large. But how were prosperity and well-being themselves to be measured? An Enlightenment thinker, Jean-Jacques Rousseau, had suggested in his influential book On the Social Contract (1762) that counting heads is a metric for good government:

The government under which …the citizens do most increase and multiply, is infallibly the best. Similarly, the government under which a people diminishes in number and wastes away, is the worst. Experts in calculation! I leave it to you to count, to measure, to compare. (Rousseau, cited from Porter 1986, 2)

One might take issue with the criterion proposed here by Rousseau, and indeed, in 1798, Thomas Malthus suggested that an unchecked increase in human population size would lead to greater misery. There was, nonetheless, at the turn of the nineteenth century a strong belief that governments could direct their resources more effectively on the basis of precise statistical data, a belief that led them to devote more and more resources to the collection of such data.

If the origins of what the historian T.M. Porter called the “great statistical enthusiasm” of the early nineteenth century (Porter 1986, 27) are to be found in France, the gathering of statistical data was also gaining momentum in Britain. Part of the motivation for this movement was provided by the Napoleonic Wars, as accurate census data were required in order to obtain satisfactory results from orders of conscription designed to expand the armed forces. In part, British data-collection efforts were motivated by the social dislocation brought about by industrialization, and a concomitant fear of a French-style revolution on British soil. In Britain, as in France, the “experts in calculation” took an interest in the numbers produced by the new data-collection schemes. In 1833, at the instigation of Malthus, Charles Babbage, and others, the British Association for the Advancement of Science formed a statistical section.

Interpreting statistics and calculating probability

It is one thing, of course, to collect statistical data, but quite another to extract useful knowledge from that data once collected (Stigler, 1986). While the problem is a very general one, it was posed in a clear manner not in the domain of military conscription or social welfare, but in astronomy, which was undergoing a revolution of sorts after William Herschel’s discovery, in 1781, of a previously-unseen planet in the Solar System: Uranus. Using a home-made reflecting telescope, Herschel (1738–1822), a German-born orchestra director and amateur astronomer in Bath, was compiling a catalog of celestial objects that had not received much attention: double stars. One night in March, he noticed an object in the sky that he took at first for a comet. Successive sightings, however, revealed the object to be a planet, bringing the number of known planets in the Solar System to seven – the first such addition to the Solar System in recorded history (Hoskin, in Taton & Wilson 1995, 193). Herschel’s discovery reinforced his scientific reputation, and he soon secured a position as a professional astronomer under the patronage of King George III.

Herschel’s curiosity extended far beyond the observation of double stars. With the able assistance of his sister Caroline Herschel (1750–1848), he took up another neglected class of celestial objects: nebulæ. Over the next two decades, Herschel published catalogs of some 2500 nebulæ and clusters, grouped in eight categories distinguished by apparent luminosity and structure. Caroline, like her brother William, benefited from royal patronage; she was the first woman to receive a salary for scientific work, and she provided a brilliant example for other women to follow. William, in connection with his observations, calculated the relative motion of the Solar System among the stars, and used the result to build the first model of the structure of the Milky Way. The idea that the sidereal universe has a structure at all, which is to say, that stars are not randomly distributed, had been advanced earlier by John Michell, in the first application of probabilistic reasoning in astronomy. The odds against an observed grouping of stars, the six bright stars of the Pleiades cluster, being a random occurrence were, Michell argued, several billion to one. Some cause ruled the heavens, and for Michell, Newton’s universal law of gravitation was a good candidate. The contributions of Michell, Herschel and others in the late eighteenth century effectively transformed the nature of astronomy, from a science based on the observation of the position of planets and comets in the Solar System, to one whose purview was the entire sidereal universe (North, 2008).

Questions concerning the stability of the Solar System and of the sidereal universe were renewed following Herschel’s discoveries. Isaac Newton (1643–1727) inaugurated such analyses with calculations of the secular perturbations of planetary orbits, which led him to believe that divine intervention was required in order to hold the Solar System together. As for the universe, Newton wondered how it could possibly be stable under universal gravitation. In correspondence with the classical scholar and cleric Richard Bentley, Newton agreed that in the case of an inhomogeneous distribution of matter, only a divine power could prevent the collapse of the universe.

The eighteenth century saw far-reaching progress in the sophistication of the tools of mathematical analysis, and by the turn of the nineteenth century, the question of the stability of the Solar System was ripe for reevaluation by Pierre-Simon de Laplace (1749–1827). The conclusion of Laplace’s analysis was diametrically opposed to that of Newton, although he assumed with Newton that the dynamics of the Solar System depend only on the central force of gravitation. Laplace found the Solar System to be in a stable state, requiring nothing other than gravitation and inertial forces to keep the planets on course. Apparently, advanced mathematics had eliminated Newton’s proof of the existence of God, although it seems unlikely that this was ever Laplace’s intention.

Laplace, like many of his contemporaries in the Enlightenment, was a keen student of mathematical probability, a subject he took up in the 1770s. In 1812 he published the Analytical Theory of Probability, a work that became a standard reference for nineteenth-century mathematical probability and still defines classical probability theory. With Laplace, two grand ideas come together. First, there is the idea that nothing happens without a cause. The philosopher from Königsberg, Immanuel Kant (1724–1804), had written as much in his masterwork, the Critique of Pure Reason (1781): “All that happens is hypothetically necessary”, and “Nothing happens through blind chance”. Although Kant’s theory of the foundations of knowledge in his Critique was broadly inspired by the success of Newtonian mechanics, Kant did not rely on this success to justify his negative view of chance events.

If nothing ever happens by chance, what is probability about? For Laplace, all that happens, happens because of natural law, even if we are unable to ascertain, in certain cases, how this comes about. This is where the laws of probability come into play. We can be certain that everything happens for a reason, Laplace wrote in the second edition of the Théorie analytique, because of the “regularity that astronomy shows us in the motion of the comets”. The lawfulness revealed by the resounding success of celestial mechanics, in other words, extends to all phenomena, including, for Laplace, social phenomena.

It was no accident that Laplace picked out the motion of comets as his prime example of the descriptive power of Newtonian mechanics (Stigler, 1999, 56). Cometary orbits, unlike those of the planets, can be quite unusual. While the planets all follow slightly eccentric elliptical paths lying roughly in the same plane, comets travel in highly inclined planes, and undergo strong perturbations when in the vicinity of planets or the Sun.

Following Herschel’s discovery of Uranus, astronomers armed with new and more powerful telescopes were on the lookout for an eighth planet, which they thought would most likely be found in the space between Mars and Jupiter. The known planets all respected the empirical law of Titius-Bode, according to which the semi-major axis of the orbit $a$, measured in astronomical units, is given by the relation $a = 0.4 + 0.3 \times 2^n$, where $n = -\infty, 0, 1, 2, \ldots$, such that the value of $n$ is fixed by the sequential order of the planet, with one exception: there seems to be a planet missing between Mars ($n = 2$) and Jupiter ($n = 4$). An early form of the law was suggested in 1715, and most remarkably, the unexpected discovery of Uranus ($n = 6$) served to confirm the law.
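The Titius-Bode rule is easy to tabulate. The following Python sketch evaluates $a = 0.4 + 0.3 \times 2^n$ for the conventional assignment of $n$ to the bodies known around 1801, with Mercury taking $n = -\infty$; the function name `titius_bode` and the table `SLOTS` are illustrative choices, not historical notation.

```python
import math


def titius_bode(n):
    """Semi-major axis, in astronomical units, given by a = 0.4 + 0.3 * 2**n.

    Mercury is conventionally assigned n = -infinity, for which 2**n is 0.
    """
    return 0.4 + 0.3 * 2.0 ** n


# Conventional assignment of n to the bodies known by 1801 (an assumption of
# this sketch, following the usual modern presentation of the rule).
SLOTS = [
    ("Mercury", -math.inf), ("Venus", 0), ("Earth", 1), ("Mars", 2),
    ("(gap)", 3), ("Jupiter", 4), ("Saturn", 5), ("Uranus", 6),
]

if __name__ == "__main__":
    for name, n in SLOTS:
        print(f"{name:8} n = {n:>4}  a = {titius_bode(n):.1f} AU")
```

Run as a script, it lists predicted distances of 0.4, 0.7, 1.0, 1.6, 2.8, 5.2, 10.0 and 19.6 AU. The empty slot at $n = 3$ falls at 2.8 AU, close to where Ceres (semi-major axis about 2.77 AU) was eventually found.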

The hunt was on for the Solar System’s eighth planet, and in 1801 an astronomer in Palermo, Giuseppe Piazzi (1746–1826), thought he had sighted it. He gave it a name: Ceres Ferdinandea, in honor of his patron, King Ferdinand IV of Naples and Sicily. The problem was that Piazzi was able to obtain only a few sightings before the object (which in public he called a comet, to be on the safe side) moved into the Sun’s glare, making further observation impossible. There was no analytical method available to determine an orbit from such slim data, and it was feared that Piazzi’s comet would be lost. A young mathematician and astronomer in Braunschweig, Carl Friedrich Gauss (1777–1855), learned of the situation and, on the basis of Piazzi’s measurements, which covered only three degrees of arc in a geocentric frame, went to work on a new method for determining the orbit. Three months later, Gauss predicted where the object would reappear; when it was recovered, roughly a year after its disappearance, it lay within half a degree of his predicted position. Gauss’s exploit was widely hailed, although Herschel refused to consider Ceres as the eighth planet, less on account of its modest diameter, measured by him at 261 kilometers, than on account of the inclination of its orbit with respect to the ecliptic, measured at thirty-four degrees. Instead, Herschel proposed Ceres as the first “asteroid”.

It is likely that Gauss used the method of least squares on this occasion, in order to determine the “true” path of Ceres from Piazzi’s raw angular measurements. The method of least squares was first published in 1805 by Adrien-Marie Legendre (1752–1833) in an appendix to a work on the determination of cometary orbits, but Gauss later claimed that he had been in possession of the method since 1795, a claim that irked Legendre considerably. Legendre had in fact given a straightforward and easily understood presentation of a method applicable to a wide variety of problems in which multiple observations are to be combined. The question of the accuracy of the method, however, was not broached by Legendre at the time.
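Legendre’s principle can be illustrated in a few lines: choose the unknowns so that the sum of the squared residuals is as small as possible. The Python sketch below does this for the simplest case, a straight line $y = a + bx$ fitted to hypothetical observations. The data and the function name are assumptions for illustration only; Gauss’s orbit determination for Ceres involved a far more elaborate, nonlinear problem.

```python
def least_squares_line(xs, ys):
    """Fit y = a + b*x by minimizing the sum of squared residuals.

    Setting the derivatives of sum((y - a - b*x)**2) with respect to a and b
    to zero yields two linear 'normal equations', solved here in closed form.
    """
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need at least two paired observations")
    sx, sy = sum(xs), sum(ys)
    sxx = sum(x * x for x in xs)
    sxy = sum(x * y for x, y in zip(xs, ys))
    det = n * sxx - sx * sx
    if det == 0:
        raise ValueError("all x values coincide; the slope is undetermined")
    b = (n * sxy - sx * sy) / det
    a = (sy - b * sx) / n
    return a, b


if __name__ == "__main__":
    # Hypothetical observations scattered around the line y = 1 + 2x.
    xs = [0, 1, 2, 3, 4]
    ys = [1.1, 2.9, 5.2, 6.8, 9.0]
    a, b = least_squares_line(xs, ys)
    print(f"a = {a:.3f}, b = {b:.3f}")  # prints a = 1.060, b = 1.970
```

If the slope is dropped and only a constant is fitted, the same principle returns the arithmetic mean of the observations, which is the link to the probabilistic argument that follows.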

Gauss, in his own presentation of the method of least squares, showed how probability entered the picture, thus affording a means of judging the accuracy of the method. In modern terms, Gauss sought the form of error density, and the method of estimation, that lead to the arithmetic mean as the estimate of the location parameter. To do so, he availed himself of the works of Laplace, including Laplace’s form of Bayes’s theorem, and derived what was later called the normal, or Gaussian, distribution. Naturally, Laplace read Gauss’s work with great interest, and noticed its relevance for his own recent proof of an early version of what is known today as the central limit theorem, generalizing De Moivre’s normal approximation to the binomial distribution. Thanks to his reading of Gauss, Laplace now understood the connection between the central limit theorem and linear estimation. Unlike Gauss, Laplace justified the use of the method of least squares by its minimization of the posterior estimated error for a large number of trials, and pointed out that the normal error distribution leads to the arithmetic mean as the posterior mode, and is the only differentiable, symmetric distribution to do so. What Laplace’s new understanding amounted to was that if each measurement were considered to be the average of a large number of independent components, the distribution of such measurements would be normal.
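Laplace’s observation can be checked numerically. The sketch below is a modern illustration, not Laplace’s proof: each simulated error is the average of several independent components drawn uniformly from $[-1, 1]$, a deliberately non-normal choice, and the component distribution, sample size and random seed are all assumptions. It compares the share of errors lying within one standard deviation of their mean with the value of about 0.683 expected for a normal distribution.

```python
import random
import statistics
from statistics import NormalDist


def composite_errors(num_measurements, components, seed=0):
    """Simulate measurement errors, each the average of `components`
    independent pieces drawn uniformly from [-1, 1] (deliberately non-normal)."""
    rng = random.Random(seed)
    return [
        sum(rng.uniform(-1.0, 1.0) for _ in range(components)) / components
        for _ in range(num_measurements)
    ]


def fraction_within(errors, k=1.0):
    """Share of errors lying within k sample standard deviations of their mean."""
    mu = statistics.fmean(errors)
    sd = statistics.pstdev(errors)
    return sum(abs(e - mu) <= k * sd for e in errors) / len(errors)


if __name__ == "__main__":
    normal = NormalDist()
    target = normal.cdf(1.0) - normal.cdf(-1.0)  # share within 1 sd for a normal law
    print(f"normal law: {target:.3f}")
    for m in (1, 2, 12):
        share = fraction_within(composite_errors(20_000, m))
        print(f"{m:2d} component(s): {share:.3f}")
```

With a single component the share is close to $1/\sqrt{3} \approx 0.577$, as for any uniform distribution; with a dozen components it is already close to the normal value.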

By 1812, Laplace had brought together two currents of mathematical thinking: the combination of observations via aggregation of linear equations, on the one hand, and on the other, the assessment of uncertainty via mathematical probability. This intellectual and formal fusion opened up broad vistas for application of mathematical statistics, but it was appreciated at first only by those working in mathematics and the exact sciences of astronomy, geodesy, and physics. Even in these disciplines, where the ideal of precision measurement was promoted, the use of statistical methods was largely limited to the domain of error correction via least-squares linear regression, to the neglect of uncertainty analysis.

Fourier’s theory of heat propagation

One physicist who quickly grasped the importance of Laplace’s breakthrough was his former student at the École normale supérieure, Joseph Fourier (1768–1830). A former prefect under Napoleon, Fourier was named director of the Bureau of Statistics of the Seine after Napoleon’s downfall, and soon thereafter he was elected to the Paris Academy of Sciences. From 1821 to 1829 the Bureau of Statistics published four volumes, containing both data and methods for their analysis. These methods were not new to mathematicians, but were published for the benefit of a broader public unfamiliar with higher mathematics. For example, one finds in a volume published in 1826 (reprinted in Fourier’s Œuvres) a technique for evaluating hypotheses with respect to a certain “standard of certainty.” This proposes a formula for the degree of approximation of the calculated mean to the true mean, which differs by a constant factor from the modern definition of standard deviation.

Fourier also drew on Laplace’s theory of probability in the course of his celebrated treatise, The Analytical Theory of Heat (1822). This work represented a clean break, not only with Biot’s steady-state theory, but with the style of physical reasoning associated with Laplace and his disciples, issuing from celestial mechanics and Newtonian optics. Laplace expected all natural phenomena, from the microscopic scale to that of the universe, to obey a central force law on the model of Newton’s universal law of gravitation, but admitting forces both attractive and repulsive (for more on this approach, see Chapter 4). Biot’s account of heat diffusion was of just this sort. In its stead, Fourier favored a phenomenological approach, wherein a few choice facts are selected for mathematical generalization in the form of linear partial differential equations, boundary conditions are imposed, and analytical solutions are sought and compared to observation. Fourier’s theory of heat is distinguished by its very generality, in that it contains no assumptions about the structure of matter. In consequence of this generality, its scope of application was huge, and in this sense commentators have described it as a veritable cosmology of heat, distinct from the mechanical cosmology of previous centuries.

It often happens in theoretical physics that formulating a mathematical model of a given phenomenon turns out to be simpler than solving the resulting equations. This was true for Fourier in 1811, when he proposed an equation for heat flow in solids with a form recalling that of the wave equation:

$$\frac{\partial v}{\partial t} = \frac{K}{CD}\left(\frac{\partial^2 v}{\partial x^2} + \frac{\partial^2 v}{\partial y^2} + \frac{\partial^2 v}{\partial z^2}\right),$$

where $v$ is the temperature of the medium, $t$ is time, $K$ is thermal conductivity, $C$ is specific heat, and $D$ is the density of the medium (Grattan-Guinness, 1990). Finding solutions for solids of definite shape and temperature profiles can be a challenge, and here Fourier proposed several new and powerful analytic methods, including dimensional analysis and what was later known as Fourier analysis, involving harmonic distributions (on these methods, see Chapter 3). Fourier showed how the initial temperature distribution of a body can be modeled as pure surface effects, giving rise to a solution describable as the linear sum of a series of sine and cosine waves, the coefficients of which are fixed by the geometry. This Fourier series expansion of the solution is inserted into the heat equation. Uniqueness of Fourier series guarantees that the coefficients on each side of the equation are equal, resulting in an ordinary differential equation, independent of time, that is easy to solve for the coefficients. While there are infinitely many coefficients in all but the most trivial cases, calculating the first few harmonics often suffices to determine the temperature at any given position to a good approximation. Fourier’s analytical method proved to be quite general, finding application in classes of physical and mathematical problems characterized by linear differential equations with constant coefficients, including electrostatics (§ Field Theory).
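Fourier’s series method can be sketched numerically for the simplest case. The Python example below assumes a thin rod of length $\pi$ whose ends are held at zero temperature, a hypothetical initial profile $x(\pi - x)$, and a diffusivity $\kappa$ (the ratio of conductivity to the product of specific heat and density) set to 1; these choices and the helper names are illustrative assumptions, not Fourier’s own example. Each sine coefficient is obtained by numerical integration, and each harmonic then decays as $e^{-\kappa n^2 t}$.

```python
import math


def sine_coefficients(f, n_terms, samples=2000):
    """Approximate b_n = (2/pi) * integral_0^pi f(x) sin(n x) dx (midpoint rule)."""
    h = math.pi / samples
    mids = [(i + 0.5) * h for i in range(samples)]
    return [
        2.0 / math.pi * h * sum(f(x) * math.sin(n * x) for x in mids)
        for n in range(1, n_terms + 1)
    ]


def temperature(x, t, coeffs, kappa=1.0):
    """Truncated series v(x, t) = sum_n b_n exp(-kappa n^2 t) sin(n x)."""
    return sum(
        b * math.exp(-kappa * n * n * t) * math.sin(n * x)
        for n, b in enumerate(coeffs, start=1)
    )


def initial_profile(x):
    """Hypothetical initial temperature: hottest in the middle, zero at both ends."""
    return x * (math.pi - x)


if __name__ == "__main__":
    coeffs = sine_coefficients(initial_profile, 15)
    for t in (0.0, 0.1, 1.0):
        print(t, round(temperature(math.pi / 2, t, coeffs), 4))
```

Because the $n$-th harmonic decays like $e^{-\kappa n^2 t}$, the higher terms die out quickly, which is why the first few harmonics usually suffice once heat has had time to spread.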

In addition to providing a powerful analytical framework for mathematical physics, Fourier’s theory of heat flow proved to be suggestive, in a qualitative sense, in several branches of physics, beginning with electricity and magnetism. The invention of the Voltaic pile by Alessandro Volta (1745–1827) at the turn of the nineteenth century gave a huge boost to experimentation in this domain, which led, in turn, to the discovery of a series of surprising effects. Perhaps the most surprising new effect was the one discovered by the Danish physicist Hans Christian Ørsted (1777–1851) in 1820, whereby an electric current through a wire produces a circular magnetic force. Ørsted’s discovery unified electricity and magnetism, and led rapidly to the foundation of electrodynamics by André-Marie Ampère (1775–1836), and to the theory of the electromagnetic field, conceived of by Michael Faraday (1791–1867); see Steinle (2016). In London, Humphry Davy (1778–1829) found the conducting power of metal wires to be in inverse proportion to wire length, and in direct proportion to cross-sectional area. He also found that conductivity varied inversely with wire temperature. In Berlin, Georg Simon Ohm (1787–1854) extended Davy’s investigations, studying the effect of varying the driving power of a Voltaic battery, or what was later known as voltage. In doing so, Ohm supposed that electricity propagates along a wire from one particle to the next, and that the magnitude of this flow is proportional to the voltage difference between adjacent particles, just as in Fourier’s theory the flow of heat between two points depends on the temperature differential. Ohm’s experiments led him to advance his eponymous law (1827) relating electric current to voltage and wire resistance in a circuit.
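Ohm’s particle-to-particle reasoning, combined with Davy’s proportionalities, can be sketched as a chain of segments in which the flow between neighbours is proportional to their difference of potential, as heat flow is proportional to temperature difference in Fourier’s theory. The Python sketch below is a modern illustration in arbitrary units, not Ohm’s own calculation: it holds the ends of the chain at fixed potentials, relaxes the interior until every particle passes on what it receives, and compares the resulting flow with $I = V/R$, taking the resistance of $N$ identical segments to be $N$ divided by the conductance per segment. The function names are hypothetical.

```python
def chain_flows(voltage, segments, conductance, sweeps=5000):
    """Flow through each segment of a chain of 'particles'.

    The flow between neighbouring particles is taken to be proportional to
    their potential difference. The ends are held at `voltage` and 0, and the
    interior potentials are relaxed (Gauss-Seidel sweeps) until each particle
    passes on exactly what it receives.
    """
    u = [0.0] * (segments + 1)
    u[0] = voltage
    for _ in range(sweeps):
        for i in range(1, segments):
            # Steady state: inflow from the left equals outflow to the right.
            u[i] = 0.5 * (u[i - 1] + u[i + 1])
    return [conductance * (u[i] - u[i + 1]) for i in range(segments)]


def ohm_current(voltage, resistance):
    """Ohm's law in its modern form, I = V / R."""
    return voltage / resistance


if __name__ == "__main__":
    for n in (10, 20):
        flows = chain_flows(1.5, n, 1.0)
        print(n, round(flows[0], 6), ohm_current(1.5, n / 1.0))
```

Doubling the number of segments, that is, the length of the wire, halves the current, in line with Davy’s finding that conducting power varies inversely with length.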

Adolphe Quetelet’s social physics

The science of probability was an integral part of Fourier’s cosmology, a fact reflected by the faith he placed in the validity of the normal distribution for representing not just errors, but natural frequencies. For example, Fourier considered a physical model in which heat is initially concentrated at one point of an infinite, one-dimensional conductor. Solving his heat equation for this case, Fourier found that the resulting distribution of heat at any later time was just the normal distribution. From an epistemological standpoint, since both the heat equation and Laplace’s binomial derivation of the normal distribution lead to the same result, both are equally confirmed by experimental observation in this instance, such that a preference for one explanation over the other is a matter of taste.
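In modern notation, the solution for a unit quantity of heat released at a single point of an infinite rod is $v(x,t) = (4\pi\kappa t)^{-1/2}\exp\left(-x^2/(4\kappa t)\right)$, where $\kappa$ is the diffusivity: a normal density with mean 0 and variance $2\kappa t$. The Python sketch below checks both facts numerically, the first by finite differences against the one-dimensional heat equation $\partial v/\partial t = \kappa\,\partial^2 v/\partial x^2$ and the second against Python’s `statistics.NormalDist`. The parameter values are arbitrary assumptions, and Fourier’s own derivation is not reproduced here.

```python
import math
from statistics import NormalDist


def point_source_temperature(x, t, kappa=1.0, heat=1.0):
    """Temperature at x and time t > 0 after `heat` is released at x = 0
    in an infinite one-dimensional conductor (modern closed form)."""
    return heat / math.sqrt(4 * math.pi * kappa * t) * math.exp(-x * x / (4 * kappa * t))


def heat_equation_residual(x, t, kappa=1.0, h=1e-3):
    """Central-difference estimate of dv/dt - kappa * d2v/dx2; should be near 0."""
    v = point_source_temperature
    dv_dt = (v(x, t + h, kappa) - v(x, t - h, kappa)) / (2 * h)
    d2v_dx2 = (v(x + h, t, kappa) - 2 * v(x, t, kappa) + v(x - h, t, kappa)) / h ** 2
    return dv_dt - kappa * d2v_dx2


if __name__ == "__main__":
    kappa, t = 1.0, 0.5
    sigma = math.sqrt(2 * kappa * t)  # the spread grows like the square root of time
    normal = NormalDist(mu=0.0, sigma=sigma)
    for x in (0.0, 0.5, 1.0, 2.0):
        print(x, point_source_temperature(x, t, kappa), normal.pdf(x))
```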

The success of the probabilistic approach was suggestive to Fourier, who sought a molecular representation of the nature of heat. “Molecules separated from one another”, he wrote in 1822, “communicate their rays of heat reciprocally across airless space, just as shining bodies transmit their light.” Heat dispersion, according to Fourier’s model, is the result of rays transmitted by contiguous molecules, in conformity with his heat equation. Heat flow, he concluded, “then results from an infinite multitude of actions whose effects are added”. Such a view of molecular action naturally lent itself to a great variety of physical phenomena, and not just those concerning heat dispersion. The same was true of the Gaussian law of error, which Fourier suggested was “imprinted in all of nature – a pre-existent element of universal order” (Fourier (1819), cited by Porter, 1986, 99).

Fourier’s mathematical approach to physics deeply impressed scientists in France and throughout Europe. One of these scientists was a Belgian named Adolphe Quetelet (1796–1874). Born and educated in Ghent when this city was a part of France, Quetelet had great admiration for the accomplishments of the French mathematicians Laplace, Lacroix, Fourier and Poisson. Soon after he obtained a Ph.D. in mathematics, Quetelet was sent to the Royal Observatory in Paris for three months to obtain instruction in the finer points of astronomy. When he returned to Brussels in 1823, he brought with him an appreciation – and a certain enthusiasm – for the science of statistics.

In Paris, Fourier had facilitated the application of the latest statistical techniques to the data collected by the Bureau of Statistics, but he was not otherwise concerned with a probabilistic understanding of human society. The science of both Fourier and Laplace exercised the mind of young Quetelet, who sought to extend these methods to human affairs. In doing so, he latched onto an analogy promoted by Laplace, who claimed that just as the law of universal gravitation sustains order in the physical realm, the eternal laws of truth, justice, and humanity maintain order in the civil realm. Laplace had investigated these eternal laws, if only in a limited sense, when he used the laws of probability to ascertain how judicial error depends on the number of jurors.

Quetelet believed that statistical analysis would identify the laws governing civil order, and he aspired to become the “Newton of this other celestial mechanics”, which is to say, the master of the mechanics underlying the storm of social phenomena (cited by Porter, 1986, 104). One implication of Quetelet’s understanding of the laws of social phenomena, which was drawn by many in this emergent field, was that statisticians require training in advanced mathematics. Another implication was that any measurable entity related to humans and human activity was susceptible to statistical analysis, whether it be a physical quantity like body weight, or a behavioral statistic, like the incidence of violent crime.

Statistics on crime were published in France from 1827, and in the 1830s the statistical movement grew rapidly in France and Britain. The British Board of Trade published economic statistics from 1832, while census and mortality records were collected by the General Register Office, created in 1837. Public hygiene was a priority in France and Britain, where statistical societies were founded in major cities and took part in campaigns in favor of public education, sanitation, and poor-law reform. No longer reserved to specialists, statistics had entered the sphere of public discourse.

In the midst of the institutional and popular rise of statistics, Quetelet published his first major work, On Man and the Development of His Faculties, or an Essay on Social Physics (1835). One notion Quetelet introduced then found great resonance among his readers. This was the notion of the “homme moyen” or the average man (Stigler 1986). (Since Quetelet did not write about the “personne moyenne,” though he might have, this translation reflects the original usage and contemporary understanding of his writings.) What is an average man? For Quetelet, the average man was a mathematical fiction meant to represent the set of arithmetic means associated with the statistics of a homogeneous population of men. Consequently, the average man would have different characteristics for different populations.

A few years later, Quetelet made further inroads into a generalization of statistical reasoning, by considering the normal distribution to be the “natural” distribution, much like Fourier’s treatment of one-dimensional heat flow, mentioned above. Already in 1818, the German astronomer Friedrich Bessel examined the departures from the normal distribution of 60,000 measurements of 3,222 stars, and suggested sources of variation other than simple measurement error. In other words, Bessel compared the normal distribution with observed stellar positions to judge the nature of the variations in positional reports for a given star. Bessel might have taken the opportunity to evaluate the method of least squares, but he did not do so. None of his contemporaries did so, either. Perhaps the most obvious reason for this state of affairs is that there was simply no alternative at hand to the method of least squares. The idea is nonetheless present that, in some cases, the variation of an observed quantity may fruitfully be compared to a normal distribution.

| Male height | Number of males, measured | Number of males, calculated | Difference (measured minus calculated) |
|---|---|---|---|
| Below 1.570 m | 28,620 | 26,345 | +2,275 |
| 1.570–1.597 m | 11,580 | 13,182 | −1,602 |
| 1.597–1.624 m | 13,990 | 14,502 | −512 |
| 1.624–1.651 m | 14,410 | 13,982 | +428 |
| 1.651–1.678 m | 11,410 | 11,803 | −393 |
| 1.678–1.705 m | 8,780 | 8,725 | +55 |
| 1.705–1.732 m | 5,550 | 5,527 | +23 |
| 1.732–1.759 m | 3,190 | 3,187 | +3 |
| Above 1.759 m | 2,490 | 2,645 | −155 |

As for Quetelet, even though he was a founder and director of the Brussels Observatory, his creative force was directed less to astronomy than to social physics. He also had great faith in the power of the central limit theorem. For instance, much as Bessel had wondered how to interpret a non-normal distribution of the observed position of a star, Quetelet expected the height of adult males in a given population to be normally distributed. When the statistics showed a distribution of height in a given population that was not normal, Quetelet inferred that there was a reason for this: an unknown cause must have intervened, resulting in a deviation from the normal distribution of height.

Quetelet noticed, in particular, that the heights of French conscription candidates, while featuring a single apex as in a normal distribution, differed from such a standard distribution in that they were neither symmetric nor smooth. What could explain such an anomaly? The number of hypotheses one could introduce is nearly limitless, but Quetelet seized upon the following: bad data. His height statistics, Quetelet inferred, were marred by fraud. Quetelet knew, of course, that 20-year-old Frenchmen shorter than 157 centimeters were exempted from conscription. For those preferring not to serve (presumably a large sub-population among French 20-year-old males) who happened to be only slightly taller than the cutoff, the incentive to persuade the authorities to shave a centimeter or two off the measured height was apparent. As for the authorities, Quetelet reasoned that they would be willing to play along in borderline cases, since the individuals concerned, being of modest stature, would be replaced by others endowed with a “more advantageous height”.¹

¹ Adolphe Quetelet, Sur l’appréciation des documents statistiques, et en particulier sur l’appréciation des moyennes, Bulletin de la Commission centrale de statistique 2, 1844, 205–286, on 262.
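The arithmetic behind Quetelet’s suspicion can be read off the table of conscript heights above. The short Python sketch below, whose variable names are illustrative, computes the measured minus calculated count for each height class, and compares the surplus in the class below the 1.570 m exemption threshold with the shortfall in the two classes just above it.

```python
# Height classes and counts as given in the table of French conscripts above.
CLASSES = [
    "below 1.570 m", "1.570-1.597 m", "1.597-1.624 m", "1.624-1.651 m",
    "1.651-1.678 m", "1.678-1.705 m", "1.705-1.732 m", "1.732-1.759 m",
    "above 1.759 m",
]
MEASURED = [28620, 11580, 13990, 14410, 11410, 8780, 5550, 3190, 2490]
CALCULATED = [26345, 13182, 14502, 13982, 11803, 8725, 5527, 3187, 2645]


def differences(measured, calculated):
    """Measured minus calculated count for each height class."""
    return [m - c for m, c in zip(measured, calculated)]


if __name__ == "__main__":
    diff = differences(MEASURED, CALCULATED)
    for label, d in zip(CLASSES, diff):
        print(f"{label:15} {d:+6d}")
    # Quetelet's suspicion in numbers: a surplus below the exemption threshold
    # alongside a shortfall in the two classes immediately above it.
    print("surplus below cutoff:", diff[0])
    print("shortfall just above:", -(diff[1] + diff[2]))
```

The surplus of 2,275 men below the cutoff is close to the combined shortfall of 2,114 in the two classes immediately above, the pattern one would expect if some borderline candidates had been recorded as shorter than they were.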

In Quetelet’s hands, the normal distribution became a means by which to evaluate a population’s homogeneity. Some contemporaries objected that this view was unjustified, and not only on mathematical grounds. Quetelet’s other ideas, including that of the average man, were also subjected to criticism. His critics, however, were drowned out by the general acclaim accorded social physics. Taking into account Quetelet’s background and the rising authority at this time of astronomers and physicists in public discourse, the popularity of his ideas is not difficult to understand. In a period of great socio-economic and political upheaval, accompanied by a tidal wave of social statistics, what he offered to social reformers was highly seductive: a rational foundation for shaping society. While his misconceptions were many, Quetelet’s goal of ascertaining the principles of social order through mathematical analysis was laudable and in phase with the progressive spirit of the mid-nineteenth century.

Although Quetelet’s writings on probability were widely translated, read and studied, their long-term impact was negligible, as applications of error theory made no significant gains in the social sciences until the twentieth century. Error theory remained important in astronomy and physics, the areas from which it had emerged, but biology, meteorology, geology, and empirical economics went largely untouched by it. Several reasons for this difference may be considered, but among them one stands out. In celestial mechanics, and Newtonian dynamics in general, one relies on equations of motion as a guide to studying the object under investigation, which thereby gains a certain objectivity, independent of measurement and probability. When Gauss calculated the orbit of Ceres, he fitted measured positions to an elliptical orbit. While the measured positions were assumed to be slightly in error, the true position of Ceres was assumed to coincide with an elliptical orbit (barring eventual perturbations caused by the gravitational attraction of nearby planets). The same could not be said of the objects studied in other disciplines. For example, there is no law of human development which would affirm the true height of 20-year-old Frenchmen to be, say, the median height. Likewise, longevity was not normally distributed, as humans from favorable socio-economic backgrounds live longer, on average, than those from the poorer classes. Quetelet thought he knew why: the rich are characterized by an “enlightened will …[and] habits of propriety, of temperance, of passions excited less frequently and variations less sudden in their manner of existence” (cited by Porter, 1986, 103).

Machine efficiency and mathematical economics

Other social theorists suggested, more sensibly, that wealth per se granted privileged access to adequate nutrition and clean water, which resulted in greater longevity on average. But even the founder of Marxism, Karl Marx (1818–1883), found that Quetelet’s concept of the average man could lead to a uniform standard of labor, and thereby provide a precise foundation for the labor theory of value, in which there is one price for labor (Porter 1986, 66). In the 1870s, the labor theory of value was supplanted by the marginal theory of value, in which wages vary. The first mathematical theory of economics, Augustin Cournot’s Researches into the Mathematical Theory of Wealth (1838), borrowed heavily from the laws of physics, which had, as yet, no statistical component. Just as Quetelet believed the civil realm to be ruled by laws like those that ruled the motion of the planets, so did Cournot (1801–1877) model his rational economics on rational mechanics. Much as the productivity of a machine could be calculated from the laws of physics, based on the energy extracted in the form of work, the productivity of an enterprise was to be measured by its profit report. In both cases, maximization of productivity was the objective. The French economist Léon Walras (1834–1910), working in Lausanne, took Cournot’s mechanics-based approach a step further by introducing the notion of equilibrium. In Walras’s scheme, prices of goods interact in a perfect market based on demand curves, rationally determined by the relation between the utility of goods and their abundance. The calculation of marginal utility here is deterministic, and based on infinitesimal calculus.

The idea of extracting work from machines was one that emerged with the onset of the First Industrial Revolution. The Watt steam engine and its successors provided power to manufacturing sites, and from the turn of the nineteenth century, reciprocating steam engines drove the locomotives that delivered raw materials to these sites, and moved finished goods to markets. From the 1820s, works on the theory of machines and industrial mechanics in France drew on a century-old engineering tradition of extracting power from water, wind, and steam. In this context, the efficiency of hydraulic engines and steam engines was characterized by various means. The efficiency of a hydraulic engine was measured by its capacity to restore a volume of the motive agent to its source, which is to say, a measure of the original head of water. A hydraulic engine capable of restoring the original head in its entirety was, by definition, a perpetual-motion machine.

A comparable characterization of the efficiency of a steam engine remained elusive until a French engineer, Sadi Carnot (1796–1832), gave the problem some thought. Sadi Carnot was the son of one of Napoleon’s ministers, Lazare Carnot, and was trained at the École polytechnique. Drawing on a number of intellectual sources, including Fourier, Carnot realized that an analogy from hydraulic engines to heat engines (such as steam engines) was at hand. In Carnot’s analogy, the impossibility of perpetual motion, expressed for hydraulic engines as the impossibility of restoring entirely the original head, has its counterpart in the ideal heat engine. Such an engine extracts work, not from a difference of head, but from a finite temperature difference between two bodies, and conversely, it produces a temperature difference in the two bodies when work is supplied. This process is reversible, and defines the maximum efficiency of any heat engine. Also, if the two bodies are at the same temperature, the ideal heat engine does no work. Finally, unless work is applied, heat always flows “downhill”, from a warmer body to a colder one, and never “uphill”, from a colder body to a warmer one. Carnot’s principle succinctly expresses what would become the second law of thermodynamics, elaborated some three decades later by William Thomson and Rudolf Clausius. Carnot’s Reflections on the Motive Power of Fire (1824; Fox 1986), however, was largely ignored both in his home country and abroad, with one important exception.

In 1834, Émile Clapeyron (1799–1864), a fellow graduate of the École polytechnique, gave Carnot’s ideas mathematical as well as graphical expression. Quickly translated into English and German, Clapeyron’s work brought Carnot’s ideas to the attention of Thomson and others in the 1840s, in both thermal physics and field theory. Carnot’s concept of an ideal heat engine was likely ignored at first in part due to its novelty, and yet in one respect, it was quite traditional. Carnot simply pushed the analogy between hydraulic and heat engines too far because he thought of heat as a substance, caloric, a subtle form of matter akin to a highly elastic fluid. The caloric theory arose during the eighteenth century and was promoted by the great chemist Antoine Lavoisier.

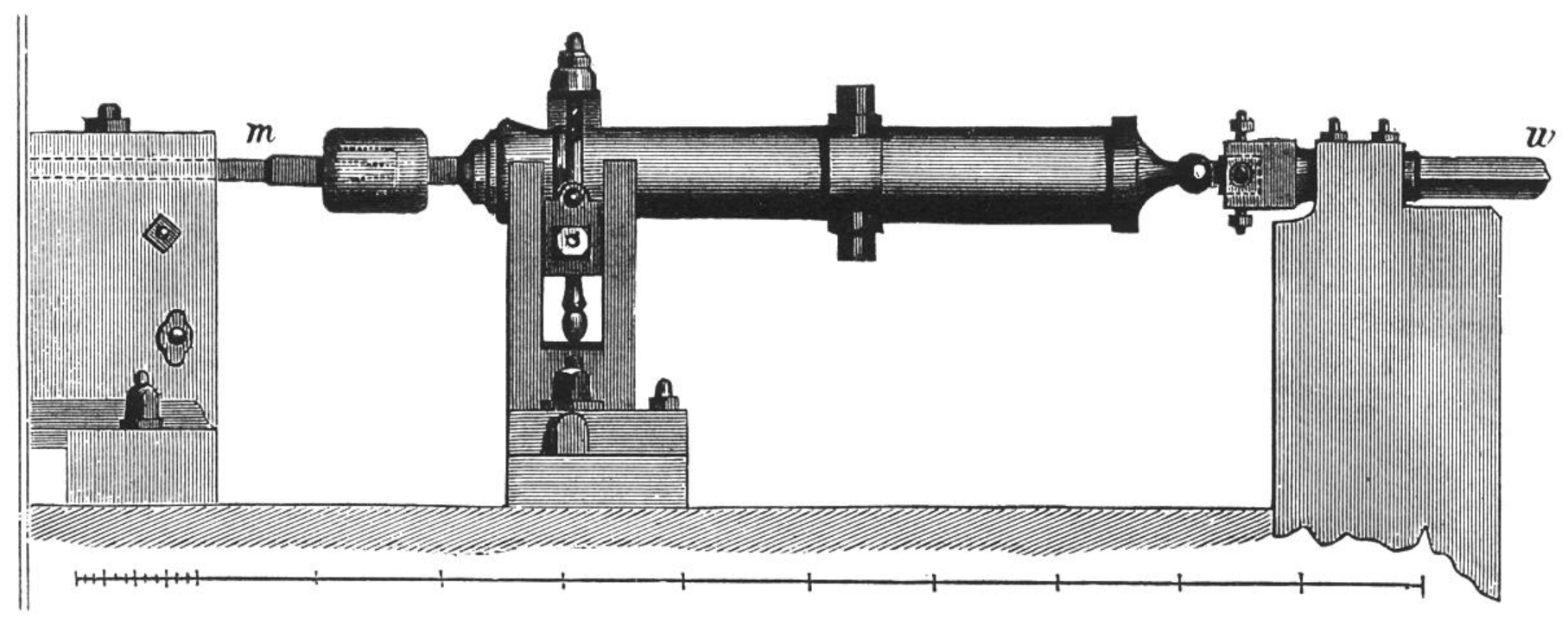

A tax-collector during the Ancien Régime, Lavoisier fell victim to the violence of the French Revolution, but his wife survived, and wed another man of science, the American expatriate Benjamin Thompson (1753–1814), Graf von Rumford. Rumford presented an argument tending at once to disprove the caloric theory of heat, and to affirm the kinetic theory. At the arsenal in Munich, where he served as superintendent, Rumford observed that the surface of a cannon became quite hot during a boring operation. This gave him the idea of a “beautiful experiment”, in which he replaced the boring head with a flat piece of steel pressed against one end of a rotating brass cannon, and immersed the contact surface in a water bath. After two and a half hours of frictional contact, with the cannon rotating at thirty-two RPM, Rumford reported that the water began to boil, to the astonishment of onlookers, there being no fire. The weight of the metal powder resulting from the experiment was less than 270 grams, and Rumford considered improbable the idea that caloric liberated from this minute quantity of material could bring about the significant increase in temperature of the water bath. He concluded that the heat generated in this case was not due to a material substance but rather to the motion of the cannon, which is to say, it was kinetic.

Thermodynamics and the kinetic theory of gases

Rumford went on to embrace theories of heat and light as wave phenomena, while in England, John Herapath (1790–1868) developed an image of heat as the motion of atoms (Brush, 1983). The absolute temperature of a gas, Herapath argued, is a direct function of molecular velocity (instead of the square of velocity, as Daniel Bernoulli had it), where molecules are modeled as elastic spheres. The caloric theory was in decline in the 1820s, but Herapath’s theory itself was plagued by inconsistencies, and gained few followers. One of these followers, however, was James Joule (1818–1889), who compared the atoms of a gas to high-speed projectiles, flying in all directions. Where Carnot’s heat engine posited the inter-convertibility of heat and mechanical work, Joule, the son of a brewer and a former student of the atomist John Dalton, performed an experiment to determine quantitatively the mechanical equivalent of heat. Using a paddle-wheel immersed in an insulated jar of water, attached to a mechanism driven by two falling weights, Joule determined the precise amount of mechanical work required to raise the temperature of a pound of water by 1° Fahrenheit. His experiments led him to hold, contrary to Carnot, that whenever work is produced by heat flow, a quantity of heat is consumed in proportion to the amount of work extracted.
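To give a sense of how close Joule came, the sketch below takes his 1850 figure (the fall of 772 pounds through one foot for each pound of water warmed by 1° Fahrenheit, a number not quoted in the text above) and converts it to joules, comparing it with the modern British thermal unit, which is defined through the international-table calorie. The conversion factors are modern definitions, not Joule's own.

```python
# Modern exact conversion factors (not available to Joule).
FOOT_POUND_FORCE_J = 1.3558179483   # 1 ft·lbf in joules
POUND_G = 453.59237                 # 1 avoirdupois pound in grams
CAL_IT_J = 4.1868                   # international-table calorie in joules
FAHRENHEIT_DEGREE_K = 5.0 / 9.0     # size of a 1 °F interval in kelvin


def joule_1850_estimate_j(ft_lbf: float = 772.0) -> float:
    """Work (J) to warm 1 lb of water by 1 °F, from Joule's ft·lbf figure."""
    return ft_lbf * FOOT_POUND_FORCE_J


def modern_value_j() -> float:
    """The same quantity from 1 cal per gram per kelvin (i.e. 1 BTU, IT)."""
    return POUND_G * CAL_IT_J * FAHRENHEIT_DEGREE_K


joule = joule_1850_estimate_j()
modern = modern_value_j()
print(f"Joule 1850: {joule:.1f} J, modern: {modern:.1f} J, ratio {joule / modern:.3f}")
```

Joule's figure comes to roughly 1047 J against about 1055 J today, an agreement to within about one percent.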

Joule’s experiment impressed the German physicist Hermann Helmholtz (1821–1894), who agreed with the view that heat is a form of motion. Accordingly, Helmholtz understood that the law-like conversion of heat to work, and vice-versa, meant that the law of conservation of mechanical vis viva could be extended to a universal conservation of energy, or in Helmholtz’s terminology, Kraft. Electricity and magnetism were subject to the principle as well: Helmholtz observed that the passage of electric current produces heat. In a bold generalization from extant knowledge, he predicted that all physical interactions, including chemical and organic processes, would likewise verify the principle of conservation of energy.

When Helmholtz unified all known forces under the principle of energy conservation, he discarded both the caloric theory and the notion, promoted earlier by Ampère, of heat propagation by means of wave motion, preferring instead to embrace a theory of matter in motion. Three years later, Rudolf Clausius (1822–1888) gave the principle of energy conservation mathematical expression. A former student of Dirichlet, and of the historian Leopold von Ranke, Clausius was teaching physics in Berlin when he came upon Clapeyron’s paper, which impressed him a great deal, although he found it lacking in rigor. In particular, Clausius (following Joule and Thomson) was not convinced that work could be extracted from a heat engine without some concomitant consumption of heat. Abandoning Carnot’s assumption that no heat is lost in a cyclic process, he managed to reconcile the views of Carnot and Joule by affirming the latter’s position on the equivalence between heat and mechanical work. Clausius thus held that when an ideal heat engine produces work, it consumes a certain quantity of heat. Moreover, another quantity of heat is lost in passing from a warmer body to a colder one, and both of these quantities are proportional to the amount of work extracted. Today we recognize in these two principles the first and second laws of thermodynamics, respectively.

In 1854, Clausius sharpened the expression of the second law of thermodynamics and fundamentally transformed both the science of thermodynamics and the scientific understanding of the physical world. He noticed that Carnot’s principle expressed the motive power of fire as depending on a difference of temperature, and not of heat. He also noticed that Carnot considered two transformations of heat: (1) the lossless heat transfer from a warmer body to a colder one, and (2) the conversion of heat from the warmer body into work. The two transformations are equivalent, Clausius claimed, provided that the heat cycle is reversible.

This equivalence of transformations led Clausius to affirm the equivalence of corresponding “equivalence-values” of such transformations. From the assumption that the product of equivalence-values of two transformations is equal to the equivalence-value of the product of their transformations, and that the total equivalence-value of transformation of a reversible cycle of a bi-thermal heat engine is zero, Clausius derived the expression

$$Q_1\,f(t_1) - Q_2\,f(t_2) = 0,$$

where $Q_1$ is the heat taken in from the warmer body at temperature $t_1$, $Q_2$ is the heat given up to the colder body at temperature $t_2$, and $f$ is a function of temperature alone. Clausius knew of Thomson’s definition of absolute temperature $T$, according to which

$$\frac{Q_1}{Q_2} = \frac{T_1}{T_2},$$

such that $f(t)$ is proportional to $1/T$, and went on to consider reversible cyclic processes of a general form. Writing $dQ$ for the net heat flow from a body at temperature $T$ into the working fluid, this leads to

$$\oint \frac{dQ}{T} = 0.$$

For a transformation realized by an irreversible cycle of a heat engine, the value of the integral would necessarily differ from zero. Clausius chose to assign a negative value to the integral in this case, so that for any closed system,

$$\oint \frac{dQ}{T} \le 0,$$

with equality holding only for reversible cycles.
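A minimal numerical sketch of Clausius's sign convention, assuming heat is counted positive when the working fluid receives it. A reversible engine working between two bodies gives a vanishing sum of $Q/T$; if a quantity of heat is assumed to leak straight from the warmer body to the colder one (a hypothetical irreversibility chosen only for illustration), the sum becomes negative. The temperatures and heat quantities are arbitrary.

```python
def clausius_sum(heats_and_temps):
    """Sum of Q/T over the heat exchanges of one cycle.

    Each entry is (Q, T): Q > 0 when the working fluid receives heat,
    Q < 0 when it gives heat up; T is absolute temperature in kelvin.
    """
    return sum(q / t for q, t in heats_and_temps)


T_HOT, T_COLD = 373.15, 273.15   # illustrative body temperatures (K)
Q_IN = 1000.0                    # heat drawn from the warmer body (J), illustrative

# Reversible cycle: Thomson's absolute scale gives Q_out / Q_in = T_cold / T_hot.
q_out = Q_IN * T_COLD / T_HOT
reversible = clausius_sum([(Q_IN, T_HOT), (-q_out, T_COLD)])
work = Q_IN - q_out              # first law: work = heat in - heat out

# Irreversible variant: an extra LEAK passes directly from hot to cold,
# so more heat is drawn and rejected while the work stays the same.
LEAK = 50.0
irreversible = clausius_sum([(Q_IN + LEAK, T_HOT), (-(q_out + LEAK), T_COLD)])

print(f"reversible sum:   {reversible:.2e} J/K")
print(f"irreversible sum: {irreversible:.4f} J/K")
print(f"work per cycle:   {work:.1f} J")
```

The leak contributes $q/T_{hot} - q/T_{cold}$, which is negative whenever $T_{hot} > T_{cold}$; this is the sense in which an irreversible cycle makes the integral negative.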
In the reversible, quasi-static case, considering that the working fluid takes on the temperatures of the bodies exchanging heat, Clausius saw that the transformation value $dQ/T$ is a property of the fluid: since its integral vanishes on completion of a reversible cycle, it is a complete differential, $dS = dQ/T$. From the Greek word for transformation (τροπή), Clausius later (1865) introduced the term “entropy” for $S$, and taking the universe for the object system, he re-expressed the fundamental laws of thermodynamics as follows:

1. The energy of the universe is constant.
2. The entropy of the universe tends to a maximum.

Along with Joule and Helmholtz, Clausius felt the equivalence of heat and mechanical work to be consistent with the hypothesis that heat is due to molecular motion (Brush, 1983). Starting in 1857, Clausius introduced the kinetic theory of gases to thermodynamics with an aptly entitled paper, “On the kind of motion we call heat.” Objections to this theory, which equated the temperature of a gas with the mean value of the squared velocity of its constituent molecules, led Clausius to adopt a more sophisticated approach, relying on the statistical concept of a “mean free path”, defined as the average distance covered by a molecule before it strikes another molecule. The approach was bold, inasmuch as he had no idea how molecules interact. But Clausius made a few assumptions that seemed reasonable, and formulated what is regarded as the first approach to molecular motion as a stochastic process.

| Calculation of the mean free path. A key assumption made by Clausius in his derivation of the mean free path was that of the “sphere of action”. A gas molecule moves unperturbed in a straight line until it enters the sphere of action of another molecule, where this sphere of action is fixed by the value of a radius $\rho$ extending from the center of the molecule. To find the probability that a molecule passes unperturbed through a gaseous layer of thickness $x$, Clausius noted that, if the probability is $a$ for unit thickness, then for thickness 2 the probability is $a^2$, and the probability that a molecule traverses a layer of thickness $x$ without collision is $a^x = e^{-\alpha x}$, where $\alpha$ is a positive constant. Assuming further that the collision probability is just the ratio of the volume swept out by the spheres of action to the volume of the gas layer, for molecules with mean separation $\lambda$, Clausius set the probability of a collision within a thin layer of thickness $x$ equal to $\pi\rho^2 x/\lambda^3$, such that, for small $x$, $1 - e^{-\alpha x} \approx \alpha x = \pi\rho^2 x/\lambda^3$. If we denote the number of molecules per unit volume as $N$, such that $N = 1/\lambda^3$, then we have $\alpha = \pi\rho^2 N$. The mean free path for the case of all molecules save one at rest, denoted $l'$, is then $l' = 1/\alpha = \lambda^3/(\pi\rho^2)$. Clausius reasoned that, when all molecules move with like speed, the mean free path is only $3/4$ as long as in this case, such that the mean free path of the latter, more realistic, case is just $l = \frac{3}{4}\,\frac{\lambda^3}{\pi\rho^2}$. By assuming a certain value for the radius of the sphere of action, Clausius found that his mean-free-path approach explained rather well the fact that smoke clouds disperse slowly when prevailing winds are gentle. |
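Why $1/\alpha$ is the mean free path can be made explicit with a standard calculation (added here for illustration; it is not Clausius's own presentation). If $e^{-\alpha x}$ is the probability of travelling a distance $x$ without collision, the probability density of the first collision occurring at $x$ is $\alpha e^{-\alpha x}$, and the mean distance travelled is

$$\bar{x} = \int_0^\infty x\,\alpha e^{-\alpha x}\,dx = \frac{1}{\alpha} = \frac{1}{\pi\rho^2 N},$$

with $\alpha = \pi\rho^2 N$ for spheres of action of radius $\rho$, $N$ molecules per unit volume, and all molecules but one at rest.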

One of Clausius’s early readers was the young James Clerk Maxwell (Flood, McCartney, Whitaker, 2014), who was familiar with the rudiments of probability theory, having read a review of Quetelet’s work published by William Herschel’s son John in 1850. Maxwell came up with a slightly different expression for the mean free path length, with only the slightest of justification: $l = 1/(\sqrt{2}\,\pi\rho^2 N)$, where $\rho$ is the radius of the sphere of action and $N$ the number of molecules per unit volume. Clausius was nonplussed, and published a correction, but in fact Maxwell’s formula follows from more rigorous arguments than the one he offered at the time, as Clausius later recognized.
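The two correction factors, Clausius's $3/4$ and Maxwell's $1/\sqrt{2}$, can be checked numerically. The sketch below (a Monte Carlo illustration, not the reasoning of either physicist) takes the mean free path to scale as the ratio of a molecule's mean speed to the mean relative speed of colliding pairs. For molecules all moving with the same speed in random directions, the mean relative speed is $4/3$ of that speed; for Maxwell's independent Gaussian velocity components it is $\sqrt{2}$ times the mean speed. Sample size and seed are arbitrary choices.

```python
import math
import random


def random_unit_vector(rng):
    """Isotropic direction: normalise a vector of independent Gaussians."""
    while True:
        v = [rng.gauss(0.0, 1.0) for _ in range(3)]
        norm = math.sqrt(sum(c * c for c in v))
        if norm > 0.0:
            return [c / norm for c in v]


def mean_relative_speed_ratio(sampler, n=50_000, seed=1):
    """Estimate <|v1 - v2|> / <|v|> for independent molecules drawn by sampler."""
    rng = random.Random(seed)
    rel_total = speed_total = 0.0
    for _ in range(n):
        v1, v2 = sampler(rng), sampler(rng)
        rel_total += math.dist(v1, v2)
        speed_total += math.hypot(*v1)
    return rel_total / speed_total


def equal_speed(rng):
    # Clausius's case: unit speed, random direction.
    return random_unit_vector(rng)


def maxwellian(rng):
    # Maxwell's case: independent Gaussian velocity components.
    return [rng.gauss(0.0, 1.0) for _ in range(3)]


clausius_ratio = mean_relative_speed_ratio(equal_speed)
maxwell_ratio = mean_relative_speed_ratio(maxwellian)
print(f"equal speeds: {clausius_ratio:.3f} (4/3 = {4 / 3:.3f})")
print(f"Maxwellian:   {maxwell_ratio:.3f} (sqrt 2 = {math.sqrt(2):.3f})")
```

The mean free path is proportional to the reciprocal of these ratios, giving about $3/4$ and $1/\sqrt{2} \approx 0.707$ of the value for a single molecule moving among molecules at rest.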

Maxwell also discerned a way to determine the equilibrium distribution of molecular velocity, $f(v)$, such that the average number of molecules with speeds between $v$ and $v + dv$ is $f(v)\,dv$. Assuming statistical independence of velocity components and isotropy of the distribution, Maxwell concluded that

$$f(v) = 4\pi N \left(\frac{\beta}{\pi}\right)^{3/2} v^2\, e^{-\beta v^2},$$

where $\beta$ is inversely proportional to absolute temperature, and $N$ is the number of molecules. One consequence of Maxwell’s theory gave him pause: gas viscosity is independent of density. This result appeared highly counter-intuitive, but subsequent experiments, performed with the help of his wife Katherine, confirmed the mathematical result, and did much to recommend kinetic theory to physicists.
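As a consistency check, the sketch below assumes Maxwell's speed distribution in the modern normalised form $f(v) = 4\pi N (\beta/\pi)^{3/2} v^2 e^{-\beta v^2}$, with $\beta = m/2kT$ in modern notation, and integrates it numerically with Simpson's rule: the total should recover $N$, and the mean speed should equal the closed form $2/\sqrt{\pi\beta}$. The values of $N$ and $\beta$ are arbitrary.

```python
import math


def maxwell_f(v, n_molecules, beta):
    """f(v) = 4*pi*N*(beta/pi)**1.5 * v**2 * exp(-beta*v**2)."""
    return 4.0 * math.pi * n_molecules * (beta / math.pi) ** 1.5 * v * v * math.exp(-beta * v * v)


def simpson(g, a, b, steps=2000):
    """Composite Simpson's rule on [a, b]; steps must be even."""
    h = (b - a) / steps
    total = g(a) + g(b)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * g(a + i * h)
    return total * h / 3.0


N, BETA = 1000.0, 2.0                 # illustrative values, arbitrary units
v_max = 10.0 / math.sqrt(BETA)        # beyond this, exp(-beta v^2) < exp(-100)
count = simpson(lambda v: maxwell_f(v, N, BETA), 0.0, v_max)
mean_speed = simpson(lambda v: v * maxwell_f(v, N, BETA), 0.0, v_max) / count
print(f"total molecules: {count:.6f}")
print(f"mean speed: {mean_speed:.6f} vs 2/sqrt(pi*beta) = {2 / math.sqrt(math.pi * BETA):.6f}")
```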

Maxwell was not satisfied with his derivation of the velocity distribution law, and both he and the Austrian physicist Ludwig Boltzmann (1844–1906) tried to improve upon it. In a celebrated paper of 1866, Maxwell developed the theory of transport processes in gases, concerning viscosity, heat conduction, and diffusion, based on a model in which molecules are represented by repulsive point-centers of force. Solution of the theory’s equations was beyond reach, save in the special case of a force varying as the inverse fifth power of distance. In this case, Maxwell recovered the Navier-Stokes equation for motion of viscous gases, supplying quantitative predictions relating thermal conductivity, diffusion rate, and viscosity. Later experiments, however, tended to disconfirm the temperature dependence of viscosity predicted for the inverse-fifth-power case.

Soon after Maxwell’s second kinetic theory appeared, Boltzmann generalized it to molecules with internal degrees of freedom, and allowed for influence by external forces, such as gravity (Brush, 1983). The new distribution function thus depended not only on velocity, but on potential energy, such that the relative probability of finding a molecule with potential energy $V$ at a given position is expressed in the form $e^{-V/kT}$, where $k$ is Boltzmann’s constant, and $T$ is absolute temperature. For a molecule of mass $m$, the combination of kinetic and potential energy is given by

$$E = \tfrac{1}{2}mv^2 + V,$$

such that the probability of any molecular state with total energy $E$ in a gas, liquid, or solid, is proportional to $e^{-E/kT}$.
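One familiar consequence of the factor $e^{-V/kT}$ is the exponential thinning of an isothermal atmosphere, where $V = mgh$ for a molecule at height $h$. The sketch assumes an isothermal column of pure nitrogen at 0 °C under constant gravity (idealisations not made in the text) and computes the scale height $kT/mg$, over which the relative probability falls by a factor $e$.

```python
import math

K_B = 1.380649e-23          # Boltzmann's constant, J/K (exact SI value)
AMU = 1.66053906660e-27     # atomic mass unit, kg
G = 9.80665                 # standard gravity, m/s^2


def boltzmann_factor(potential_energy, temperature):
    """Relative probability exp(-V/kT) of a state with potential energy V."""
    return math.exp(-potential_energy / (K_B * temperature))


def relative_density(height, molecular_mass_amu=28.0, temperature=273.15):
    """Density at `height` (m) relative to ground level, isothermal gas."""
    m = molecular_mass_amu * AMU
    return boltzmann_factor(m * G * height, temperature)


m_n2 = 28.0 * AMU
scale_height = K_B * 273.15 / (m_n2 * G)   # height at which the factor is 1/e
print(f"scale height: {scale_height / 1000:.2f} km")
print(f"relative density at 5 km: {relative_density(5000.0):.3f}")
```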

To demonstrate the uniqueness of Maxwell’s probability distribution, Boltzmann drew on Maxwell’s method of determining collision frequency, and derived an equation that determines the temporal evolution of the probability distribution from an initial value. Boltzmann assumed that the probability that two molecules with velocities $\mathbf{v}$ and $\mathbf{v}_1$ collide is equal to the product of the individual probabilities:

$$F(\mathbf{v}, \mathbf{v}_1) = f(\mathbf{v})\,f(\mathbf{v}_1).$$

Every collision changes the value of $f$ at four velocities: two before, and two after, the collision. Since the probability of each collision depends on $f$, the temporal evolution of the probability distribution is described by an integro-differential equation of the form:

$$\frac{\partial f(\mathbf{v})}{\partial t} = \iint \big[f(\mathbf{v}')\,f(\mathbf{v}_1') - f(\mathbf{v})\,f(\mathbf{v}_1)\big]\,B\,d\Omega\,d^3v_1,$$

where the primes indicate post-collision velocities, and $B$, which depends on the relative velocity and the geometry of the collision, accounts for inter-molecular forces. Additional terms may be introduced to account for temperature gradients, fluid velocity and external forces, leading to what was later known as Boltzmann’s equation. When Boltzmann examined the expression

$$H = \int f \log f \, d^3v,$$

he found that, as a consequence of his equation for the temporal evolution of $f$, the value of $H$ decreases strictly with time for all distributions but that of Maxwell, for which it remains constant; this is Boltzmann’s “H-theorem”. When $f$ is taken to be Maxwell’s distribution, Boltzmann noted, the value of $-H$ corresponds, apart from a constant factor and an additive constant, to the entropy of Clausius, while for other, non-Maxwellian distributions, the function $-H$ can be regarded as describing the entropy of non-equilibrium states.
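Boltzmann's equation itself is hard to solve, but the behaviour the H-theorem describes, the relaxation of an arbitrary initial distribution towards a Gaussian (Maxwellian) one, can be seen in a much simpler stochastic caricature. The sketch below swaps in a different model, the one-dimensional random-collision model introduced by Mark Kac in the 1950s, which is not Boltzmann's equation: at each step a random pair of "molecules" collides and their velocities are rotated by a random angle, which conserves their combined kinetic energy. Starting from velocities of ±1 only, the kurtosis $\langle v^4\rangle/\langle v^2\rangle^2$ is used here as a simple gauge of Gaussianity, climbing from 1 towards the Gaussian value 3. Particle number, collision count and seed are arbitrary.

```python
import math
import random


def kac_relaxation(n_particles=5000, n_collisions=100_000, seed=7):
    """Simulate Kac's random-collision model; return (initial, final) velocities."""
    rng = random.Random(seed)
    # Far-from-equilibrium start: every velocity is +1 or -1.
    v = [1.0 if i % 2 == 0 else -1.0 for i in range(n_particles)]
    initial = list(v)
    for _ in range(n_collisions):
        i, j = rng.sample(range(n_particles), 2)      # two distinct partners
        theta = rng.uniform(0.0, 2.0 * math.pi)
        c, s = math.cos(theta), math.sin(theta)
        # A rotation in the (v_i, v_j) plane leaves v_i**2 + v_j**2 unchanged.
        v[i], v[j] = c * v[i] + s * v[j], -s * v[i] + c * v[j]
    return initial, v


def kurtosis(v):
    """<v^4> / <v^2>^2: equal to 1 for +/-1 velocities, 3 for a Gaussian."""
    m2 = sum(x * x for x in v) / len(v)
    m4 = sum(x ** 4 for x in v) / len(v)
    return m4 / (m2 * m2)


before, after = kac_relaxation()
print(f"kurtosis before: {kurtosis(before):.2f}, after: {kurtosis(after):.2f}")
print(f"energy before: {sum(x * x for x in before):.6f}, after: {sum(x * x for x in after):.6f}")
```

Total kinetic energy is conserved collision by collision, as in Boltzmann's gas, while the shape of the distribution drifts irreversibly towards the Gaussian.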

Boltzmann’s H-theorem thus gave rise to a probabilistic interpretation of entropy, and hence to a statistical interpretation of the second law of thermodynamics. In the 1890s, Boltzmann argued that an irreversible approach to the state of equilibrium, being an entropy-increasing process, is just a transition from less probable macrostates to more probable ones, and that consequently, entropy is a measure of probability. For a macrostate probability $W$, the entropy of the macrostate is then described as

$$S = k \log W.$$
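A toy illustration of $S = k \log W$, assuming a hypothetical gas of $N$ distinguishable molecules, each of which may sit in the left or right half of a box. The number of microstates with $n$ molecules on the left is the binomial coefficient $\binom{N}{n}$, and the macrostate of maximum entropy, hence of maximum probability, is the even split. Entropy is expressed in units of $k$.

```python
import math


def entropy_over_k(n_total, n_left):
    """S/k = ln W, with W = C(n_total, n_left) microstates."""
    return math.log(math.comb(n_total, n_left))


N = 100
entropies = [entropy_over_k(N, n) for n in range(N + 1)]
most_probable = max(range(N + 1), key=lambda n: entropies[n])
print(f"most probable split: {most_probable} / {N - most_probable}")
print(f"S/k for the even split: {entropies[N // 2]:.2f}; all on one side: {entropies[N]:.2f}")
```

Crowding all molecules into one half corresponds to a single microstate ($S = 0$), whereas the even split is realized in about $10^{29}$ ways, which is why the approach to equilibrium runs so reliably in one direction.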
The kinetic theory briefly fell out of favor in the 1890s, despite its great promise as a solid foundation for the theory of transport processes and its success in describing phase transitions in Van der Waals’ theory of intermolecular interactions. It also presented significant mathematical and conceptual challenges that discouraged some theorists until after the turn of the century. The outlook for kinetic theory changed for the better largely due to Max Planck’s theory of black-body radiation, which relied on Boltzmann’s statistical expression of entropy.

Field theory

Another theory of physics that went through an extended period of general neglect on the part of the scientific community was the field theory of Faraday and Maxwell (Darrigol, 2000). Michael Faraday’s field theory of electricity and magnetism, elaborated in the 1830s and 1840s, opened up new vistas for physics, but gained few adepts until the 1850s. One crucial exception was the young William Thomson (1824–1907), who in 1841, just before starting university, showed via a formal analogy how Fourier’s theory of heat propagation could be successfully adapted to problems of electric potential. Thomson slightly modified Fourier’s treatment of heat sources, by showing that any isothermal surface has an equivalent expression in terms of point sources. Earlier writers, including Gauss, Laplace, and Poisson, had considered the potential $V$:

$$V = \iiint \frac{\rho}{r}\,d\tau,$$

where $\rho$ is matter density (or electric density), $r$ is distance from the attracting element to the attracted point, and $d\tau$ is the volume element, such that the gravitational (or electrical) force at the attracted point, in modern notation, is proportional to the gradient of the potential,

$$\mathbf{F} \propto \nabla V,$$

and the potential further satisfies the equation:

$$\nabla^2 V = -4\pi\rho,$$

which reduces to Laplace’s equation, $\nabla^2 V = 0$, in regions free of matter.
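Compare this to Fourier’s equations (§ Fourier’s Theory) for the temperature $u$ in a body of thermal conductivity $K$ and diffusivity $\kappa$, with heat flux $\mathbf{q}$:

$$\mathbf{q} = -K\,\nabla u$$

and

$$\frac{\partial u}{\partial t} = \kappa\,\nabla^2 u,$$

so that in a steady state the temperature, like the potential in empty space, satisfies $\nabla^2 u = 0$.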
In Thomson’s analogy, point sources of heat, which result, according to Fourier’s heat equation, in a temperature profile proportional to the reciprocal of distance from the source, correspond to electric charges. Thomson showed that the equipotential surfaces generated in space by charged conductors are analogous to the isothermal surfaces of an infinite solid body through which heat is propagating.
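The formal content of Thomson's analogy can be checked in a few lines: in a steady state Fourier's equation reduces to Laplace's equation, and the function $1/r$, read either as the temperature around a point source of heat or as the potential of a point charge, satisfies it away from the source. The sketch uses a central finite-difference Laplacian; the evaluation point and step size are arbitrary, and the function $r$ is included as a contrast, since its Laplacian is $2/r$ rather than zero.

```python
import math


def laplacian(u, x, y, z, h=1e-3):
    """Seven-point finite-difference approximation of the Laplacian of u at (x, y, z)."""
    centre = u(x, y, z)
    return (
        u(x + h, y, z) + u(x - h, y, z)
        + u(x, y + h, z) + u(x, y - h, z)
        + u(x, y, z + h) + u(x, y, z - h)
        - 6.0 * centre
    ) / (h * h)


def inverse_distance(x, y, z):
    # Steady temperature of a point heat source, or potential of a point
    # charge, up to constant factors.
    return 1.0 / math.sqrt(x * x + y * y + z * z)


def distance(x, y, z):
    return math.sqrt(x * x + y * y + z * z)


point = (1.0, 0.5, 0.3)
r = distance(*point)
print(f"Laplacian of 1/r: {laplacian(inverse_distance, *point):.2e}")
print(f"Laplacian of r:   {laplacian(distance, *point):.6f} (2/r = {2 / r:.6f})")
```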

Thomson’s analogy is all the more striking when we recall that he was still a teenager when he first formulated it in 1841. As it happened, the important theorems of his paper were already known. Yet even though C.F. Gauss, Michel Chasles, and George Green proved these earlier, none of these mathematicians had noticed the analogy between heat conduction and electrostatics. Thomson’s physical analogy worked so well that he naturally sought others, in both physics and mathematics. Upon gaining greater familiarity with Faraday’s conception of electrostatics in terms of “lines of force”, Thomson expanded his earlier analogy from Fourier heat theory to electrostatics, noting in particular that Faraday’s tubes of lines connecting conductors, which were everywhere tangent to the electric force, are formal correlates to Fourier’s lines of heat flow, everywhere orthogonal to isothermal surfaces. Thomson also noticed that the “tension” measured by Faraday in his experiments on inductive capacity, was a physical realization of Green’s “potential”, described mathematically in 1828, but never, prior to Thomson, defined in an operational sense. Faraday, for his part, was intrigued by Thomson’s analogies, and a mutually-stimulating correspondence ensued between the two men from the mid-1840s.

The meeting of Thomson and Faraday would soon shake the world, not as a direct result of table-top experiments or theoretical analogies, but through their engagement with a frustrating problem in cable telegraphy. In 1851, Dover and Calais were connected by submarine cable, and plans were made for a transatlantic cable, first realized in 1858, but soon rendered inoperative by poor cable design and mishandling (Gordon, 2002). While early submarine cables promised significant commercial and military advantages, scientists, too, stood to gain from the new technology. In an effort to improve the precision of longitude measurement, the Astronomer Royal, George Biddell Airy, collected data on the time lag of signals transmitted between Greenwich and the observatories in Edinburgh (540 km) and Brussels (313 km). The data showed, to Airy’s bewilderment, that despite the greater distance, Edinburgh’s signal time lag was nearly imperceptible, while for Brussels it came to about one tenth of a second. The mechanism behind this difference was unclear, but the culprit was fairly obvious: the telegraph cable to Brussels was mostly underground or submarine, while the Greenwich-Edinburgh line was mostly airborne.

The anomaly of differential time lag of telegraphic signals was brought to Faraday’s attention, and he promptly explained it, in an evening lecture at the Royal Institution, on 20 January, 1854 (Hunt, 2021, 22). The signal retardation, Faraday observed, was due to the gutta-percha sheathing used to insulate the copper wires. Current conduction, for Faraday, did not occur like water running through a pipe, and in fact, Faraday wholly rejected the image of a flow of electricity. Rather, the real seat of electromagnetic phenomena resided in the space around the wire. The circular magnetic force discovered by Ørsted (§ Fourier’s Theory) constituted for Faraday a “field” in space, such that electric charges were secondary phenomena, and the field itself was primary. In the case of an insulated copper wire, the existence of current required the induction of a state of strain in the surrounding dielectric, i.e., the wire’s sheathing. According to Faraday’s theory of the electromagnetic field, the observed time lag in signal propagation along copper wires is explained qualitatively by the difference in inductive capacity of the thick sheathing of buried and submarine cables, compared to the thin sheathing of airborne cables.

Faraday’s explanation provided a compelling argument in favor of his field theory, which most physicists had ignored in previous decades. Prior to the laying of long-distance telegraph cables there was no real evidence of the propagation of electrical actions in time – electrical effects all seemed instantaneous, or nearly so. Faraday’s field theory of dielectrics, when he first advanced it in 1842, was a solution in search of a real problem.

Faraday’s Royal Institution lecture naturally attracted Thomson’s attention. In a letter to George Gabriel Stokes, Thomson, recalling his earlier adaptation of Fourier’s equations to electrostatics, now cast Faraday’s notion of specific inductive capacity in the form of a second-order differential equation:

$$\frac{\partial^2 V}{\partial x^2} = RC\,\frac{\partial V}{\partial t},$$

where $R$ is the resistance per unit length, $C$ stands for capacitance per unit length, $V$ is the potential, and $x$ is the distance along the cable. Accordingly, Thomson predicted that the time required to reach a given percentage of maximum current intensity at the far end of a cable is proportional to the square of cable length $l$, or $t \propto RC\,l^2$. Tests of time lag for relatively short lengths of insulated cable could thus be used to predict with great precision the signal time lag for very long cables, such as the 3200-kilometer transatlantic cable.
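The law of squares follows from the form of Thomson's equation, $\partial^2 V/\partial x^2 = RC\,\partial V/\partial t$: stretching the cable by a factor $s$ leaves the equation unchanged if time is stretched by $s^2$. The sketch below checks this with an explicit finite-difference scheme under simplifying assumptions not found in the text: $RC = 1$ in arbitrary units, the sending end raised suddenly to unit potential, the receiving end held at zero potential, and the received current taken as proportional to the potential gradient there.

```python
def time_to_half_current(length, dx=0.025, rc=1.0):
    """Time for the far-end current of a cable to reach half its steady value.

    Solves dV/dt = (1/RC) d2V/dx2 with V = 1 at x = 0 and V = 0 at x = length.
    """
    n = round(length / dx)                 # number of grid intervals
    dt = 0.4 * rc * dx * dx                # explicit scheme is stable for dt <= RC*dx^2/2
    r = dt / (rc * dx * dx)
    v = [0.0] * (n + 1)
    v[0] = 1.0                             # sending end switched on at t = 0
    steady_gradient = 1.0 / length         # |dV/dx| once the potential is linear
    t = 0.0
    while True:
        v = [v[0]] + [
            v[i] + r * (v[i + 1] - 2.0 * v[i] + v[i - 1]) for i in range(1, n)
        ] + [v[n]]
        t += dt
        # Received current is proportional to the potential gradient at x = length.
        if (v[n - 1] - v[n]) / dx >= 0.5 * steady_gradient:
            return t


t_short = time_to_half_current(1.0)
t_long = time_to_half_current(2.0)
print(f"short cable: {t_short:.4f}, doubled cable: {t_long:.4f}, ratio {t_long / t_short:.2f}")
```

Doubling the length roughly quadruples the time to half current, as the law of squares requires.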

Using the data collected by Airy for the Greenwich-Brussels cable, Thomson was able to model the time lag for a transatlantic version, assuming the same cable design, but with a greatly-extended length. The result was rather bad news for the cable company, as Thomson’s model predicted a 10-second time lag for the proposed cable which, when combined with a concomitant signal deformation, drove the data-transmission rate so low that a transatlantic cable telegraph system would not be economically viable.
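The order of magnitude of the 10-second figure can be reproduced with the law of squares alone, on the crude assumption (made here only for illustration) that the whole 313 km Greenwich-Brussels line behaves like a uniform cable with a lag of about one tenth of a second, and that the transatlantic cable is of identical design and 3200 km long.

```python
def scaled_lag(known_lag_s, known_length_km, new_length_km):
    """Thomson's law of squares: lag grows as the square of cable length."""
    return known_lag_s * (new_length_km / known_length_km) ** 2


lag = scaled_lag(0.1, 313.0, 3200.0)
print(f"predicted transatlantic lag: {lag:.1f} s")
```

The result, roughly 10 seconds, is of the same order as Thomson's prediction; his actual calculation may have rested on different data and assumptions.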

The general enthusiasm for the great submarine cable was not to be so easily quashed. An intrepid surgeon from Brighton contested Thomson’s “squared-length” law of signal retardation, and presented measurements that seemed to support his contention. Thomson, however, was unperturbed, and he explained to the surgeon how some of his measurements had, on the contrary, confirmed the squared-length law, while the others had failed to take into account either the finer points of his theory, or those of electrical measurement. The upshot of the exchange was that Thomson became a director of the Atlantic Telegraph Company.

Thomson’s work on submarine cable technology made him a wealthy man, in spite of the utter failure of the cable laid in 1858. Thomson attributed the failure to manufacturing and production errors, particularly with respect to the gutta-percha sheathing. In light of Thomson’s report, plans were made for a new-and-improved submarine cable between Ireland and Newfoundland, and investors were found to make another try. The American Civil War delayed realization of the project until the summer of 1866. The success of this effort brought with it a knighthood for Thomson; he was subsequently elevated to the peerage, as Baron Kelvin of Largs, the first scientist to be so honored, although here his political activity in West Scotland weighed more heavily than his scientific accomplishments.

Thomson’s biographers note the symbolic power the transatlantic cable exercised on British minds in the late nineteenth century, as the technological exploit confirmed a seductive narrative of human progress, and of man’s power to subdue and control the mighty forces of nature. The Old World of Europe was now connected – “instantaneously”, as Thomson said – to the new one of the Americas, adding significantly to the reach of the electric grid, and giving rise to the image of a world connected by telegraph, railway, and ocean liners in an “electric nerve” system of a global body (The Engineer, 1857, quoted by Smith & Wise 1989, 651).

The poet Rudyard Kipling expanded on the notion of a global body in his poem “The Deep-Sea Cables” (1893), which speaks to the spiritual reach of the newest technological wonder:

The wrecks dissolve above us; their dust drops down from afar –

Down to the dark, to the utter dark, where the blind white sea-snakes are.

There is no sound, no echo of sound, in the deserts of the deep,

Or the great grey level plains of ooze where the shell-burred cables creep.

Here in the womb of the world – here on the tie-ribs of earth

Words, and the words of men, flicker and flutter and beat –

Warning, sorrow, and gain, salutation and mirth –

For a Power troubles the Still that has neither voice nor feet.

They have wakened the timeless Things: they have killed their father Time:

Joining hands in the gloom, a league from the last of the sun.

Hush! Men talk to-day o’er the waste of the ultimate slime.

And a new Word runs between: whispering, ‘Let us be one!’

Kipling’s affirmation that cable telegraphy killed time involves a measure of poetic license. According to Thomson’s telegraphy equation, confirmed by the message transmission rate of eight words per minute achieved by the transatlantic submarine cable completed in 1866, it still took time to transmit messages from the Old World to the New World. Thanks to the cable, the time for a message to cross the Atlantic decreased from ten days (by steamship) to a matter of minutes. For Kipling, messages passing along the ocean floor in the darkness represented the beginning of a new era, one in which continents are united, albeit in the gloomy Victorian age characterized by another result of Thomson’s incessant calculations: the death of the Sun.

On the basis of the first law of thermodynamics, combined with a conjecture concerning the source of the Sun’s energy, and an estimation of its radiation rate, Thomson announced that the Sun had radiated energy for less than 100 million years, and that its remaining life was no greater than a few million years. He also made an independent estimate of the age of the Earth’s mantle. Using Fourier’s heat theory and temperature gradients measured in mines and boreholes, Thomson found a cooling period of 98 million years for the mantle, from an original molten state. Just a few years before Thomson communicated these results, Charles Darwin (1809–1882) published On the Origin of Species (1859), where he estimated, rather hastily, that the age of the Earth was greater than 300 million years. Thomson’s view clearly ruled out Darwin’s theory on physical grounds, and induced many readers to join Thomson in discounting Darwinian evolution. The death of the Sun, while not imminent according to Thomson’s calculation, was nonetheless a depressing prospect for the cultural mavens of the Victorian Age, resonating in poems by Matthew Arnold, Rudyard Kipling and others. The popular success of Darwin’s subsequent book, The Formation of Vegetable Mould (1881), has been linked by the literary critic Gillian Beer to the power of Darwin’s imagery of the nearly blind worm, toiling in subterranean pathways, which worked, in the minds of Victorian readers, as an antidote of sorts to Thomson’s troubling prediction of the death of the Sun (Gillian Beer, ‘The Death of the Sun’: Victorian solar physics and solar myth, in Bullen 1989, 159). Kipling’s blind white sea-snakes and message-carrying telegraph cables exercised a similar effect on contemporary readers, countering the anxieties brought on by the result of Thomson’s calculation of the Sun’s dwindling energy.

By the 1860s, the commercial importance of submarine cable telegraphy for Britain’s empire helped resuscitate Faraday’s field theory, which was no longer viewed as an arcane and fanciful model. As the century grew older, field theory and its inventors came to be revered as national treasures. In a somewhat more subtle fashion, engineers, and not only telegraph engineers, came to recognize the power of science and mathematics to solve real-world problems. Technical societies and engineering schools flourished in the latter half of the nineteenth century, in what is known as the Second Industrial Revolution. The spread of wired telegraphy from the 1850s, and the development of related electrical measurement devices, underlined the utility of standardized units of measurement.

In response to the spread of electrical instruments and machinery, in the early 1860s Thomson called for the formation of a BAAS committee on standards of electrical resistance. The new standards were to respect the principle of “absolute” units proposed decades earlier by Gauss and Wilhelm Weber. This meant that the definition of such units had to be independent of forces that vary from place to place, such as the attraction of gravitation or the intensity of the Earth’s magnetic field. The committee succeeded in proposing electrical units, although these were not widely followed at first. Nonetheless, the first international electrical congress, held in Paris in 1881, adopted some elements of the committee’s recommendations, such as the names “ohm” and “volt,” alongside the ampere for current and the coulomb for charge (a current of 1 ampere flowing for 1 second transports 1 coulomb of charge). Maxwell was an active member of the committee; he later became the first head of Cambridge’s Cavendish Laboratory.

By the mid-1850s, Maxwell had become a leading exponent of Thomson’s approach to theorizing via “physical analogies.” Thus, he applied Fourier’s heat theory to electrostatics, and used Green’s potential function to characterize Faraday’s theory of dielectrics, among other powerful analogies. The young Maxwell found physical analogy well suited to making progress in theoretical physics. He described his method as a middle ground of sorts between a purely mathematical approach, on the one hand, and the formulation of physical hypotheses, on the other. This led him to embrace several fruitful physical analogies, including that between light undergoing refraction and a charged particle moving in a strong field of force, and a three-way analogy between light, the vibrations of an elastic medium, and electricity.

The physical analogy that Maxwell focused upon in his groundbreaking paper of 1855, “On Faraday’s lines of force”, was one between a system of electrical and magnetic poles with inverse-square interaction, and a field of incompressible fluid flowing in tubes, the tubes being assimilated to Faraday’s lines of force. Whereas Thomson had exercised prudence in his exploration of physical analogy, Maxwell pursued analogy with vigor, as this paper clearly reveals. Faraday, in his experiments in electrochemistry and on dielectrics, sought to measure electrical force along and across lines in terms of intensity and quantity. “Intensity” referred to the tension that causes electric current, and “quantity” referred to the current strength and to the integral current produced by a charged condenser. These concepts were central to Faraday’s account of force and flux, which were the basis of his theory of electrification. In Maxwell’s mind, quantity referred to the number of tubes of force intersecting a surface, while intensity referred to the number of surfaces intersected by a given tube of force.

Maxwell went on to develop a mechanical model of the electromagnetic field, inspired in part by Thomson’s image of space-filling molecular vortices. Alignment of such vortices, Thomson suggested, resulted in magnetization of matter. Maxwell’s mechanical model likewise called on vortices, to illustrate both magnetism and electric current, the latter being generated by a “layer of particles, acting as idle wheels”, rolling without sliding between the cellular vortices of the elastic, space-filling medium. The resulting system of field equations, equivalent to what would later be known as “Maxwell’s equations”, unified electrostatics and electrodynamics, but that was not all. Following Thomson’s lead, Maxwell considered transverse waves in the medium, which he assumed was composed of spherical cells, whose elasticity arises from intermolecular forces. The velocity of these transverse waves turned out to be the velocity of light (Siegel, in Cantor & Hodge 1981).
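In modern notation, the speed of transverse waves predicted by Maxwell’s field equations is c = 1/√(μ₀ε₀). Maxwell himself compared a ratio of electromagnetic to electrostatic units, measured by Weber and Kohlrausch, with measured speeds of light. The sketch below is an anachronistic restatement, not his calculation. It uses present-day SI constants (CODATA 2018 values for the vacuum permeability μ₀ and permittivity ε₀) only to show the relation numerically.

```python
import math

# CODATA 2018 values (modern SI); Maxwell worked with quite different units and data.
MU_0 = 1.25663706212e-6       # vacuum permeability, H/m
EPSILON_0 = 8.8541878128e-12  # vacuum permittivity, F/m

# Speed of transverse electromagnetic waves implied by the field equations, m/s.
c = 1 / math.sqrt(MU_0 * EPSILON_0)
print(f"predicted wave speed: {c:.4e} m/s")
```

The result agrees with the defined speed of light, 299,792,458 m/s, to within the precision of the tabulated constants.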

Maxwell’s argument in favor of a common foundation of optics and electrodynamics was later revealed to be faulty. In the meantime, however, he shelved his magneto-mechanical model in favor of a Lagrangian foundation for his field equations, thereby realizing the unification suggested by the earlier model. Maxwell’s ambitious theory inspired a number of British physicists to explore its consequences, both physical and mathematical, although Thomson considered it to be a step backward. Over the years, these “Maxwellians”, as they called themselves, convinced many of their compatriots to forgo the electric fluids and action-at-a-distance of old, in favor of field-theoretic concepts. On the Continent, however, where alternative approaches held sway, Maxwell’s theory sparked little interest. Helmholtz was a notable exception, and in 1879 he encouraged his student Heinrich Hertz (1857–1894) to engage with British field theory. A brilliant experimenter and theorist, Hertz recognized that a source of high-frequency electric oscillations could provide decisive evidence of the validity of Maxwell’s theory. While preparing his lectures on electricity and magnetism at the University of Karlsruhe, Hertz noticed a spark discharge phenomenon that suggested the existence of rapid electric oscillations. Hertz quickly mastered a device for producing such oscillations in a controlled manner, and used it first to generate electromagnetic waves on wires, and then, in 1887, electromagnetic waves in air.

Hertz’s experimental setup was not difficult to replicate, so within a short time physicists across Europe confirmed the existence of electromagnetic waves. Hertz’s discovery came as no surprise to Maxwellians, but others found it quite shocking. Almost overnight, it launched a new field of research, as physicists, engineers, and mathematicians began investigating phenomena associated with Hertzian waves. Within a few years, they also began contemplating military and commercial exploitation of wireless waves in air, as Guglielmo Marconi and others filed the first patents on wireless telegraphy. By 1902, telegraph messages were not only snaking across the ocean floor in submarine cables, they were flying through the air – and around the world – on Hertzian waves.

Notes

References

- Statistical Physics and the Atomic Theory of Matter, from Boyle and Newton to Landau and Onsager. Princeton University Press, Princeton.

- The Sun is God: Painting, Literature, and Mythology in the Nineteenth Century. Oxford University Press, Oxford.

- Conceptions of Ether: Studies in the History of Ether Theories 1740–1900. Cambridge University Press, Cambridge.

- Electrodynamics from Ampère to Einstein. Oxford University Press, Oxford.

- James Clerk Maxwell: Perspectives on his Life and Work. Oxford University Press, Oxford.

- Sadi Carnot, Reflections on the Motive Power of Fire: A Critical Edition with the Surviving Scientific Manuscripts. Manchester University Press, Manchester.

- A Thread Across the Ocean: The Heroic Story of the Transatlantic Cable. Simon & Schuster, New York.

- Convolutions in French Mathematics, 1800–1840, Volume 1: The Settings. Birkhäuser, Boston/Basel.

- Imperial Science: Cable Telegraphy and Electrical Physics in the Victorian British Empire. Cambridge University Press, Cambridge.

- Cosmos: An Illustrated History of Astronomy and Cosmology. University of Chicago Press, Chicago.

- The Rise of Statistical Thinking, 1820–1900. Princeton University Press, Princeton.

- Energy and Empire: A Biographical Study of Lord Kelvin. Cambridge University Press, Cambridge.

- Exploratory Experiments: Ampère, Faraday, and the Origins of Electrodynamics. University of Pittsburgh Press, Pittsburgh.

- The History of Statistics: The Measurement of Uncertainty before 1900. Harvard University Press, Cambridge MA.

- Statistics on the Table: The History of Statistical Concepts and Methods. Harvard University Press, Cambridge MA.

- The General History of Astronomy, Volume 2, Planetary Astronomy from the Renaissance to the Rise of Astrophysics, Part B: The Eighteenth and Nineteenth Centuries. Cambridge University Press, Cambridge.